Opinion: Reflections on the Connext Sybil Hunter Bounty

When Connext (now Everclear) launched its Sybil Hunter Bounty in August 2023, it marked one of the more interesting approaches to community-driven fraud detection in the Web3 space.

Instead of relying solely on internal teams, the program invited independent analysts and blockchain sleuths to investigate suspicious wallet clusters — rewarding successful reports with a share of recovered tokens. This was not just a technical challenge, but a rare opportunity to bring the community directly into the security process.

What worked well

The bounty structure was a strong motivator. A transparent 25% reward on recovered tokens gave hunters a tangible incentive to invest time, skills, and resources into their investigations.

Impact at a glance:

- Nearly 10% of eligible addresses were removed from the airdrop list.
- Millions in token value were protected from coordinated farming.
- The open format attracted diverse investigative approaches (from large-scale graph analytics to meticulous manual wallet tracing) that might not have been possible in a closed, in-house review.

This demonstrated that well-structured community programs can achieve results at scale and across multiple analytical methodologies.

Room to improve

Platform choice & workflow

GitHub, while familiar to many in the Web3 space, was not an ideal submission and review environment. The sheer volume of issues, comments, and parallel submissions quickly created noise.

Suggestion: Use a dedicated platform for submissions with clear categorization, duplicate detection, and private reporting options. This would make collaboration smoother and reduce accidental overlaps.

Data access & security

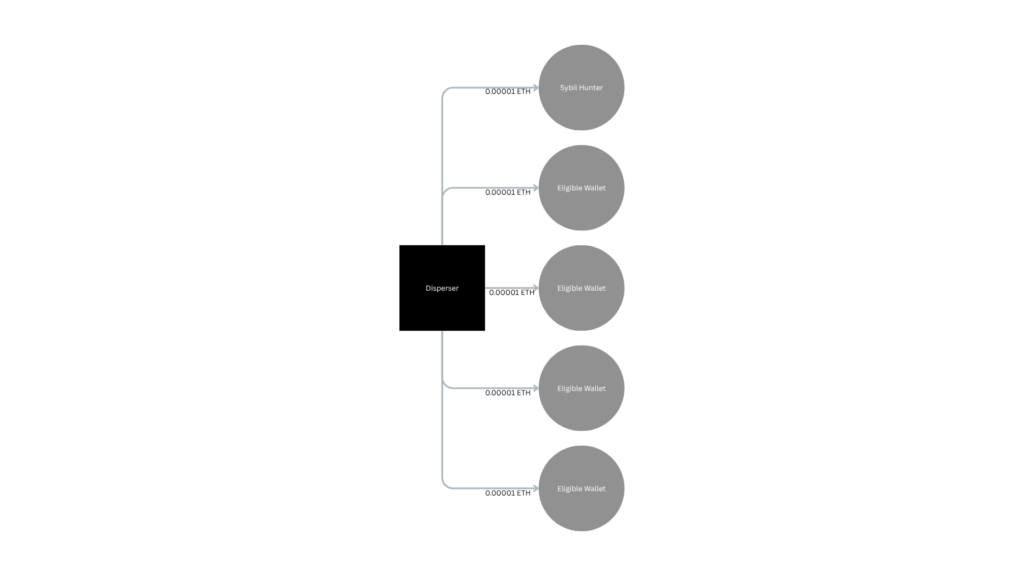

During the program, all eligible wallet addresses were public. This openness came with a downside — it enabled wallet poisoning, where very small transfers (e.g., 0.0001 MATIC or 0.00001 ETH) were sent from a dispenser to numerous eligible wallets, including at least one Sybil hunter address.

On-chain examples:

Better access controls, temporary anonymization, or hashed address lists could have made such manipulation considerably harder.
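The poisoning pattern described above (one dispenser sending dust to many eligible wallets) is also easy to screen for after the fact. The following is a minimal sketch, not the method used during the program: the `(sender, recipient, amount)` tuple format, the function name, the dust threshold, and the recipient cutoff are all assumptions chosen for illustration.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical threshold: transfers at or below this amount count as "dust".
DUST_THRESHOLD = Decimal("0.0001")
# Hypothetical cutoff: flag a sender once it has dusted this many eligible wallets.
MIN_RECIPIENTS = 3


def find_dust_dispensers(transfers, eligible,
                         dust_threshold=DUST_THRESHOLD,
                         min_recipients=MIN_RECIPIENTS):
    """Return {sender: sorted recipients} for senders that dusted many eligible wallets.

    transfers: iterable of (sender, recipient, amount) in native token units.
    eligible:  set of lowercase eligible wallet addresses.
    """
    dusted = defaultdict(set)
    for sender, recipient, amount in transfers:
        recipient = recipient.lower()
        # Only tiny transfers that land on an eligible wallet are of interest.
        if recipient in eligible and Decimal(str(amount)) <= dust_threshold:
            dusted[sender.lower()].add(recipient)
    return {s: sorted(r) for s, r in dusted.items() if len(r) >= min_recipients}
```

A flagged sender is only a lead for manual review; faucets and other benign services can produce the same fan-out shape.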

Transparency in the review process

While outcome data was eventually published, more transparency during the review phase could have helped participants understand decisions in real time — especially for borderline or rejected clusters.

A structured feedback loop would allow investigators to refine their approach mid-program, improving quality without sacrificing fairness.

Fairness in “first-report wins”

The “first report, first serve” rule sometimes rewarded speed over accuracy. In some cases, while one participant was still validating data, another could quickly submit a partial or overlapping report.

More robust duplicate checks and a short review buffer could ensure quality without discouraging thorough research.
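To make the idea concrete, here is one way a duplicate check with a review buffer might look. This is a sketch under stated assumptions: the `Report` type, the Jaccard overlap measure, the 0.5 overlap threshold, and the 24-hour buffer are illustrative choices of mine, not rules from the program.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Report:
    reporter: str
    submitted_at: datetime
    addresses: frozenset  # wallet addresses named in the report


def jaccard(a, b):
    """Share of addresses two reports have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def find_contested(reports, overlap=0.5, buffer=timedelta(hours=24)):
    """Return (earlier, later) reporter pairs whose reports overlap heavily
    and were submitted within the buffer window of each other."""
    ordered = sorted(reports, key=lambda r: r.submitted_at)
    contested = []
    for i, first in enumerate(ordered):
        for later in ordered[i + 1:]:
            # Reports are sorted by time, so everything after this is too late.
            if later.submitted_at - first.submitted_at > buffer:
                break
            if jaccard(first.addresses, later.addresses) >= overlap:
                contested.append((first.reporter, later.reporter))
    return contested
```

Contested pairs could then be judged on evidence quality rather than on timestamp alone, which is the point of the buffer.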

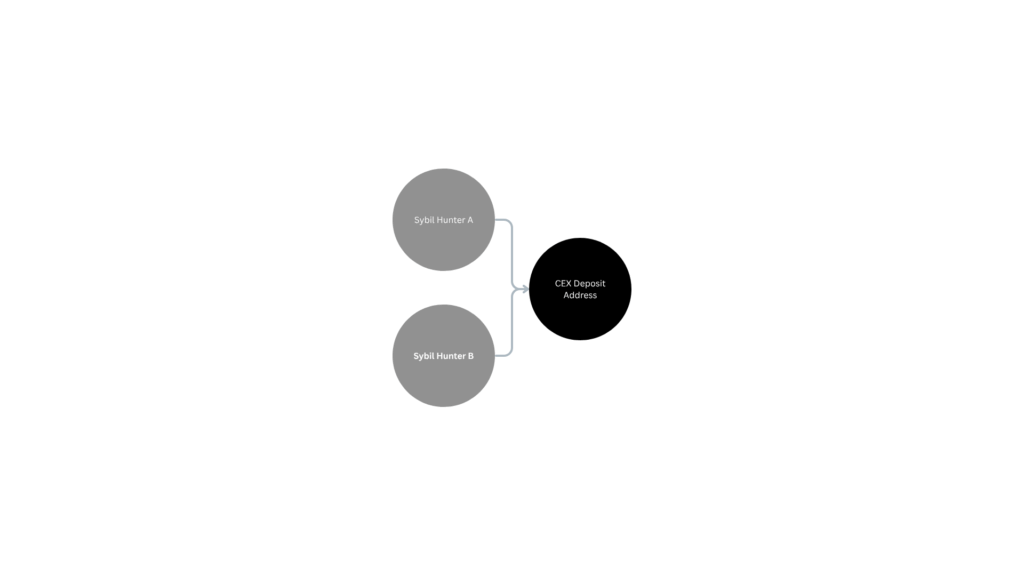

Integrity in participation

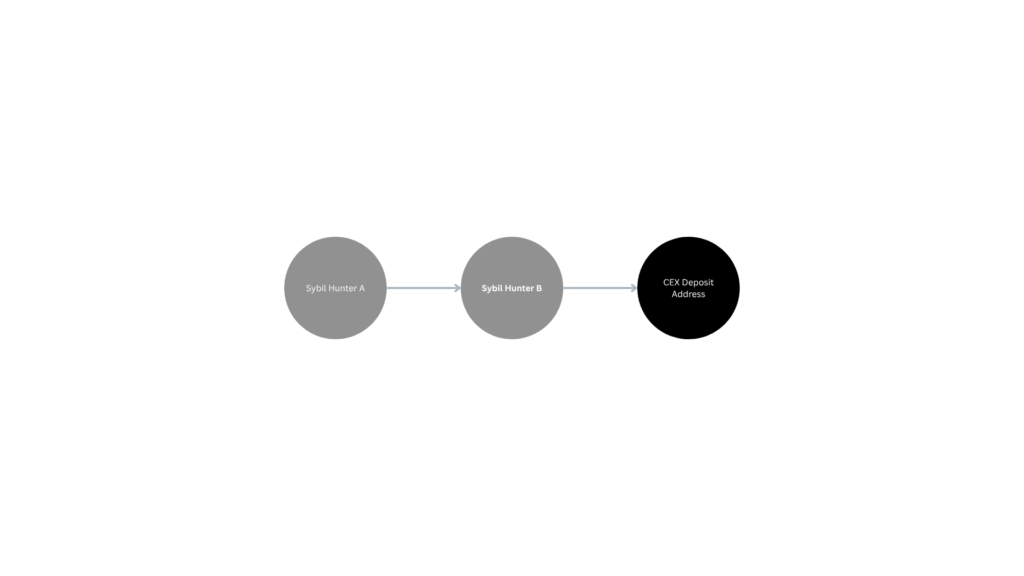

Open bounty systems must consider the possibility of strategic self-reporting or underreporting. Without making accusations, it is worth noting that on-chain analysis revealed patterns in which multiple hunter addresses appeared to be linked to the same centralized exchange (CEX) deposit address.

Addressing such risks could involve optional self-report rewards (encouraging disclosure) and enhanced participant KYC for high-value cases.
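The shared-deposit pattern mentioned above can be checked with a simple grouping. The sketch below assumes a pre-extracted list of `(sender, deposit_address)` pairs and a known set of hunter addresses; both inputs and the function name are hypothetical, and this is not necessarily how the original analysis was carried out.

```python
from collections import defaultdict


def shared_deposit_clusters(deposits, hunters):
    """Group hunter addresses by the exchange deposit address they funded.

    deposits: iterable of (sender, deposit_address) pairs.
    hunters:  iterable of hunter wallet addresses.
    Returns {deposit_address: sorted hunter addresses}, keeping only deposit
    addresses that received funds from two or more distinct hunters.
    """
    hunters = {h.lower() for h in hunters}
    by_deposit = defaultdict(set)
    for sender, deposit in deposits:
        sender = sender.lower()
        if sender in hunters:
            by_deposit[deposit.lower()].add(sender)
    return {d: sorted(h) for d, h in by_deposit.items() if len(h) >= 2}
```

A shared deposit address is a heuristic signal, not proof of common ownership, so any cluster it surfaces still needs human review.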

Timeline constraints

According to Connext, 507 reports were submitted during the program, but only 361 (about 71%) were reviewed in time for the airdrop, leaving 146 not reviewed before distribution. Extending the review period or staggering payouts could improve accuracy while still meeting distribution deadlines.

Eligible wallet list hygiene

The public list included contract addresses, dead wallets, and other non-user accounts, adding unnecessary noise.

A pre-filtered list would have saved time and allowed investigators to focus on genuine human-controlled wallets.
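A pre-filter of this kind can be quite small. The sketch below makes two assumptions that are mine rather than the program's: "dead wallets" is read as well-known burn addresses, and `get_code` is a hypothetical callable that returns an address's deployed bytecode (empty for an externally owned account).

```python
# Assumption: "dead wallets" is interpreted here as well-known burn addresses.
BURN_ADDRESSES = {
    "0x0000000000000000000000000000000000000000",
    "0x000000000000000000000000000000000000dead",
}


def prefilter(addresses, get_code):
    """Split addresses into (kept, removed); removed maps address -> reason.

    get_code: hypothetical callable, address -> bytes of deployed bytecode
              (empty bytes for an externally owned account).
    """
    kept, removed = [], {}
    for raw in addresses:
        addr = raw.lower()
        if addr in BURN_ADDRESSES:
            removed[addr] = "burn address"
        elif len(get_code(addr)) > 0:
            # Non-empty bytecode means a contract lives at this address.
            removed[addr] = "contract"
        else:
            kept.append(addr)
    return kept, removed
```

In practice `get_code` could be backed by the standard `eth_getCode` JSON-RPC method. Smart contract wallets such as multisigs would also be caught by this check, so the "contract" bucket is better treated as a manual review queue than as an automatic exclusion.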

Lessons for the future

Despite these challenges, the Connext Sybil Hunter Bounty was a valuable experiment — and one worth repeating. The nearly 10% removal rate shows that community involvement can make a significant difference in safeguarding token distributions.

With improved tooling, better protection of submission data, and a more transparent review process, future programs could be even more impactful. For analysts like me, it was a reminder that the best results come from combining open participation with strong operational frameworks.

I would join again — and I believe many others would too.

For a full breakdown of our investigation process and methodology, see our Case Study: Connext Sybil Hunter Bounty – Exposing Cross-Chain Farming Networks.